Deepfake Evidence in Criminal Cases

If you’re facing criminal accusations in Miami today, you’re dealing with a world where videos, texts, and audio recordings play an outsized role in shaping a case. Prosecutors rely on digital material more than ever, and jurors tend to give visual evidence enormous weight. But deepfake technology has changed that entire dynamic, and not always in ways the average person sees coming.

As criminal lawyers in Miami, we’re beginning to see cases where digital evidence raises authenticity questions that didn’t exist a few years ago. Manipulated video and audio aren’t yet commonplace in Miami courtrooms, but the technology exists and is becoming more accessible. We’ve handled cases where the reliability of digital evidence became central to the defense, and we’re preparing for cases in which the prosecution offers synthetic or altered media, or in which a defendant is accused of creating it. In an increasing number of cases, the core question is whether footage is genuine, edited, mislabeled, or artificially generated.

The challenge you face is simple: deepfakes can make innocent people look guilty, and they make authentic evidence harder to trust. Our job is to help you understand what this means for your defense and how to respond when the prosecution leans on digital material that may not be authentic.

This post explains how deepfake evidence can arise in a criminal case, the authentication standards courts apply, why those standards are under strain, and the strategies we use to expose manipulated content.

What Counts as a Deepfake Under Florida Law?

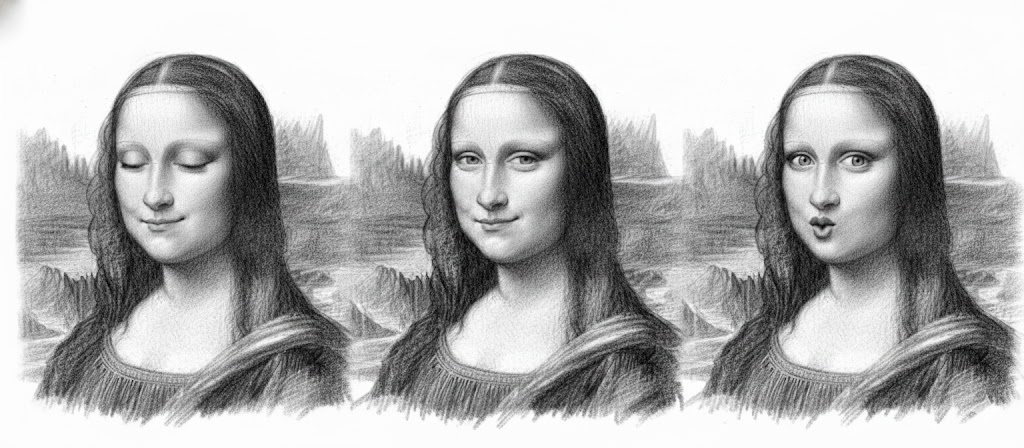

The term “deepfake” usually refers to media created or altered with artificial intelligence, typically machine-learning models that generate highly convincing videos, images, or audio. Some deepfakes swap one person’s face onto another’s body. Others fabricate conversations from scratch. And increasingly, synthetic voice models can mimic a person’s tone, cadence, and speech patterns with startling accuracy.

Florida doesn’t have a comprehensive statute that defines “deepfake” as a formal legal term across all criminal contexts, though specific laws do address certain uses of digitally altered content—particularly Florida Statute § 784.049, which criminalizes sexual cyberharassment involving altered sexual images. For most criminal cases, courts rely on traditional rules of evidence, primarily authentication, foundation, and chain of custody, to determine whether a video or audio clip can be used at trial.

That absence of comprehensive statutory guidance makes the defense role even more important. If prosecutors offer digital evidence, we are entitled to question every part of it:

- Who created it?

- Who had access to it?

- Was the file edited?

- What metadata supports or contradicts the State’s story?

- Does the clip show actual events, or something generated or modified by AI?

Deepfakes thrive where these questions go unanswered.

Why Deepfake Evidence Poses Such a Threat to Defendants

If you’re a defendant, you’re operating in an environment where one convincing video can shape public opinion, influence bail decisions, and even pressure someone into a plea before the evidence is tested.

Here’s what makes deepfakes so dangerous:

1. They are becoming difficult for the untrained eye—and even trained experts—to detect.

AI models evolve constantly. Flaws that made a fake easy to expose two years ago may be gone today, and detection methods are locked in a continuous arms race with creation technology.

2. They exploit jurors’ trust in visual evidence.

People tend to believe what they can see and hear. Even if an expert later explains that a video is unreliable, the first impression is hard to erase.

3. They may blend real and synthetic elements.

A clip doesn’t need to be completely fabricated to mislead. Even subtle modifications, such as audio splicing, face replacement, or altering the timing or sequence of events, can change the narrative entirely.

4. They complicate the idea of “digital fingerprints.”

Traditional methods of verifying photos and videos no longer guarantee authenticity. AI tools can remove or alter metadata, leaving no obvious trace. Conversely, the absence of expected metadata—such as standard camera information or creation timestamps—can itself be a red flag that something has been manipulated.

For someone charged with a crime, the presence of synthetic media raises serious concerns about fairness and due process. That’s why authentication is now one of the most important battlegrounds in digital-evidence litigation.

How Courts Traditionally Authenticate Digital Evidence

Under Florida Evidence Code § 90.901, the party offering digital evidence must produce evidence sufficient to support a finding that the material is what that party claims it is. Historically, that could be done through:

- Witness testimony – Someone with firsthand knowledge confirms that the footage accurately depicts the events.

- Metadata and file-creation information – Timestamps, device IDs, and file-history logs help establish authenticity.

- Chain of custody – The prosecution must show that the evidence hasn’t been altered while in the State’s possession.

- Expert analysis – A forensic expert can verify whether a recording shows signs of editing or manipulation.

For decades, these methods worked well. Deepfakes challenge every one of them.

Why Traditional Authentication Standards Don’t Fit Deepfakes

1. Human witnesses are unreliable against AI-created media.

A witness may think a video “looks right,” but deepfakes are designed to fool human perception. If someone genuinely believes an event happened, they may confirm authenticity even when the clip is fabricated.

2. Metadata can be forged or scrubbed.

Deepfake tools routinely allow metadata to be removed or replaced, so a fabricated file can carry metadata that looks as if it came from an ordinary phone or camera. At the same time, missing or anomalous metadata can be valuable evidence for the defense.

3. Chain of custody may not reveal pre-existing manipulation.

Chain of custody documents what happened to a file after the State obtained it. If a clip was modified before the police received it, even a flawless chain of custody won’t reveal that.

4. Some deepfakes leave no obvious forensic artifacts.

Advanced models can produce content that evades older detection methods, such as pixel-level anomaly analysis or checks for inconsistent lighting.

5. Social media uploads degrade forensic evidence.

When videos are uploaded to social media platforms, they’re typically re-encoded and compressed, which can strip metadata and erase many of the subtle artifacts experts rely on to detect manipulation. That makes authentication far more difficult, whether the content is genuine or fabricated.

The result is a widening gap between what the law expects and what technology now enables. And that gap can put defendants at risk unless their lawyers understand how to challenge digital authentication.

Defense Strategies to Attack Deepfake Evidence

When the State introduces a recording or a video in a Miami criminal case, we approach it with skepticism—not because every piece of evidence is fabricated, but because every digital file deserves scrutiny. Here are the primary strategies we use when deepfake manipulation is a realistic concern.

1. Demand strict authentication, not assumptions

We force prosecutors to meet their burden. That includes:

- Identifying the original source

- Producing the entire file history

- Explaining how the recording was obtained

- Providing device information

- Producing witness testimony that actually connects the defendant to the alleged events

If the State cannot show precisely where the file came from and how it remained unaltered, that weakness becomes a point of attack.

2. Raise objections under Florida’s Evidence Code and due process protections

Deepfakes create serious evidentiary problems: a manufactured recording is inherently unreliable, and putting fundamentally misleading evidence before a jury can violate the right to a fair trial. We file motions to exclude evidence that lacks a sufficient foundation, that is unreliable, or whose probative value is substantially outweighed by the danger of unfair prejudice, confusion of issues, or misleading the jury under Florida Evidence Code § 90.403. Synthetic videos and AI-generated audio fall squarely into those categories when their authenticity is in serious question.

3. Use forensic experts who specialize in deepfake detection

There is an entirely new class of forensic analysts trained to spot AI-generated content. They examine:

- Facial-movement inconsistencies

- Speech-pattern irregularities

- Compression signatures

- Frame-level artifacts

- Neural-network generation patterns

- Mismatches in shadows, reflections, and lighting physics

Experts also analyze the surrounding digital ecosystem: the devices, platforms, and transmission methods that may reveal how a file was altered.

Deepfake detection is a rapidly evolving field, and techniques that reliably flag today’s fakes may miss next year’s. That uncertainty can itself create reasonable doubt.

A qualified expert’s testimony can expose weaknesses that prosecutors would prefer to gloss over.

4. Challenge the file’s chain of custody and the State’s digital-handling practices

We don’t take “the officer downloaded it from a phone” at face value. We examine:

- Who handled the device

- Whether forensic imaging was performed properly

- Whether the device contained editing apps

- Whether the video came from social media (where re-encoding can erase forensic artifacts)

- Whether timestamps align with other evidence

- Whether the clip appears compressed or re-encoded

Manipulated files can slip into the record when no one questions these details. We do.

5. Present alternative explanations that create reasonable doubt

Even if a video appears to show you doing something illegal, we highlight:

- The increasing availability of deepfake apps

- The possibility that someone else generated the clip

- The absence of corroborating evidence

- The technological difficulty of distinguishing real from synthetic footage

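When authenticity is legitimately in dispute, jurors can weigh that uncertainty, and because the State must prove guilt beyond a reasonable doubt, any reasonable doubt it creates must benefit the defendant.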

When the Accusation Is That You Created a Deepfake

Sometimes our clients aren’t dealing with fabricated evidence against them—they’re charged with producing deepfakes themselves. This often arises in cases involving:

- Cyberstalking

- Sexual cyberharassment under Florida Statute § 784.049

- Harassment

- Fraud or impersonation

- Extortion

Here, the challenge is different. The State may argue that a match between your IP address, device, or social-media account and an uploaded file proves you made it. But deepfake creators often use:

- VPNs

- Spoofed accounts

- AI tools accessible from public networks

- Shared devices that can’t conclusively identify who was using them

We push back hard against assumptions about authorship. If the prosecution cannot show beyond a reasonable doubt that you created the synthetic content, their case weakens dramatically.

Why You Should Never Assume Digital Evidence Is “Game Over”

We meet many clients who believe that a video or audio clip means they have no defense. Deepfakes shift that equation. What the prosecution thinks is proof may actually be a manipulated file, a misinterpretation, or a piece of content lacking proper foundation.

Even authentic media can be misleading:

- A clip may lack context

- Audio may be incomplete

- Timestamps may be inaccurate

- Footage may be edited to remove exonerating moments

The court doesn’t simply accept digital evidence because it exists. It must be authentic, reliable, and legally admissible. Deepfakes make that standard harder for the State to meet—and easier for us to challenge.

How We Approach Deepfake-Related Issues in Miami Criminal Defense

Our firm treats digital evidence as one of the most powerful—and most dangerous—forces in modern criminal cases. When deepfake concerns arise, we:

- Interview witnesses early while memories are fresh

- Demand complete discovery, including raw files and metadata

- Retain forensic experts who understand AI-generated media

- Analyze device data through independent digital examiners

- File motions in limine to exclude unreliable or unfairly prejudicial content

- Prepare to educate jurors on how deepfakes distort reality

Most importantly, we make sure you’re not steamrolled by technology that the legal system is still catching up to.

If Deepfake Evidence Is Part of Your Case, You Need Defense Lawyers Who Understand It

Deepfakes aren’t a futuristic problem—they’re here now, shaping real cases, real arrests, and real courtroom outcomes. Whether you’re accused of something you didn’t do or charged with creating content you never touched, you’re facing a system that often struggles to tell authentic evidence from synthetic media.

We stay ahead of these developments because your freedom depends on it.

If you’re dealing with a case where digital evidence is being used against you—or you suspect that something has been altered, fabricated, or misrepresented—we’re ready to fight for you with the technical knowledge and courtroom strategy these cases demand.

CALL US NOW for a CONFIDENTIAL INITIAL CONSULTATION at (305) 538-4545, or take a moment to fill out our confidential and secure intake form.* The additional details you provide will greatly assist us in responding to your inquiry.

*Due to the large number of inquiries we receive, providing specific details about your case helps us respond promptly and appropriately.

THERE ARE THOUSANDS OF LAW FIRMS AND ATTORNEYS IN SOUTH FLORIDA. ALWAYS INVESTIGATE A LAWYER’S QUALIFICATIONS AND EXPERIENCE BEFORE MAKING A DECISION ON HIRING A CRIMINAL DEFENSE ATTORNEY FOR YOUR MIAMI-DADE COUNTY CASE.